By: Karl Rubin, COO

Data management for an enterprise used to be static, top-down, and fragmented. Not anymore. It is becoming modular, real-time, dynamic, decentralized, and complex as data spreads into every corner of an enterprise’s existence. That’s why old models and approaches just won’t work anymore. We need something more streamlined, synchronized, and agile. We need DataOps.

What is DataOps?

DataOps is the dissolving of barriers and silos across the data pipeline. It is a collaborative platform that brings all the people, processes, and technology used to manage and deliver data under a common roof. It is like DevOps for data processes and practices.

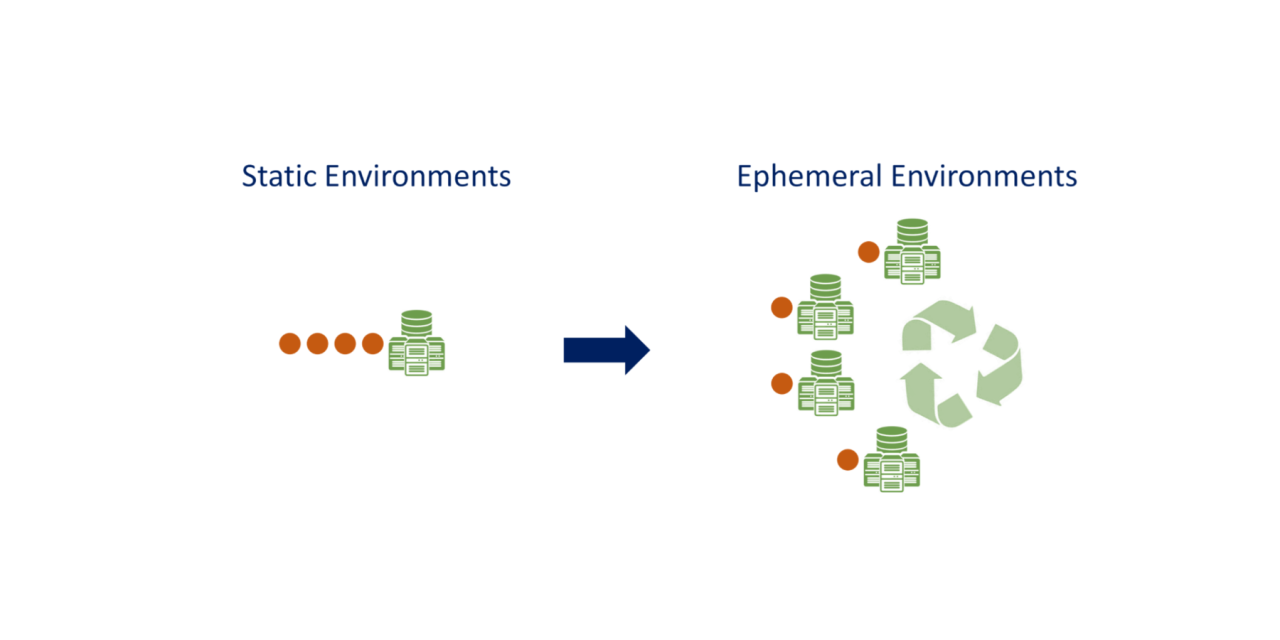

This new data paradigm brings efficiency, speed, and agility to the end-to-end data pipeline, from collection to delivery. It does so through a DataOps strategy that standardizes and automates software delivery. All the parts of a software stack are covered, specifically those related to data, from the application layer to the database and data layers, so that everything stays aligned and in sync.

Why DataOps now? – Impact and Relevance

According to The 2020 Data Attack Surface Report, total global data storage will exceed 200 zettabytes by 2025, and half of it will be stored in the cloud. How can data at this scale and spread be managed without losing direction, purpose, and control? Consider also a Nexla survey of data professionals covering how they use data, their team structure, and their data challenges: it found that 73 percent of companies are investing in DataOps. That makes sense, because orchestrating, safeguarding, and processing so much data cannot be done with conventional models. Moreover, technologies like machine learning, cloud, and artificial intelligence are redefining how we look at data. The advent of real-time applications and cloud-driven operational complexity also speeds up the need to move towards DataOps. Automating, orchestrating, and managing a disparate array of data sources will be possible only with DataOps.

Data Challenges and Implementing DataOps

Three areas can make or break any DataOps strategy: people, processes, and technology. As per IDC’s Data-to-Insights pipeline, it is important to address these areas in a prudent and focused manner at every stage: Identify Data, Gather Data, Transform Data, and Analyze Data. A high level of data discipline is also needed to address ‘data debt’, a massive challenge for companies carrying the burden of legacy and of wasted data engineering, data science, and analytics efforts.
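As a rough illustration of the four stages named above, the sketch below chains them as plain Python functions. The source names, record fields, and function names are hypothetical and are not taken from IDC's material; a real pipeline would read from actual systems rather than in-memory lists.

```python
from statistics import mean

# Hypothetical in-memory "sources"; real ones would be databases, APIs, or files.
SOURCES = {
    "crm": [{"region": "EU", "revenue": "120.5"}, {"region": "US", "revenue": "300"}],
    "web": [{"region": "EU", "revenue": "79.5"}, {"region": "US", "revenue": None}],
    "archive": [],
}


def identify(sources):
    """Identify Data: keep only the sources that actually hold records."""
    return [name for name, rows in sources.items() if rows]


def gather(sources, names):
    """Gather Data: pull rows from each identified source and tag their origin."""
    return [{**row, "source": name} for name in names for row in sources[name]]


def transform(rows):
    """Transform Data: drop rows with missing revenue and cast the rest to float."""
    return [
        {**row, "revenue": float(row["revenue"])}
        for row in rows
        if row["revenue"] is not None
    ]


def analyze(rows):
    """Analyze Data: compute the average revenue per region."""
    by_region = {}
    for row in rows:
        by_region.setdefault(row["region"], []).append(row["revenue"])
    return {region: mean(values) for region, values in by_region.items()}


def run_pipeline(sources):
    """Run the four stages in order and return the final insight."""
    names = identify(sources)
    return analyze(transform(gather(sources, names)))


if __name__ == "__main__":
    print(run_pipeline(SOURCES))
```

Keeping each stage as its own function is one possible design choice: it lets each stage be tested, replaced, or automated separately, which is the kind of discipline the paragraph above calls for.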

The Roles and People behind DataOps

In a DataOps environment, non-data people and decision-makers become less dependent on data teams to get the insights they need. The suppliers of data and insights will not have the same routines anymore. We will see the emergence of a new breed of data stewards, facilitators, and architects of the data ecosystem.

DataOps Framework

A DataOps framework rests on several pillars:

- Orchestration platform

- Automated testing and alerts

- Deployment automation

- Development sandbox

- Data science model

- Distributed computing

- Data integration and unification

- Cloud enablement
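To make two of these pillars more concrete, here is a minimal sketch of an orchestration loop that runs automated data tests after each step and raises an alert when one fails. It assumes nothing about any particular product: `Step`, `orchestrate`, `PIPELINE`, and the check functions are hypothetical names invented for this example, and the alert is just a callback that a real setup might wire to email or chat.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

Rows = List[dict]


@dataclass
class Step:
    """One pipeline step plus the data tests that must pass after it runs."""
    name: str
    run: Callable[[Rows], Rows]
    checks: List[Callable[[Rows], bool]] = field(default_factory=list)


def not_empty(rows: Rows) -> bool:
    """Data test: the step produced at least one row."""
    return len(rows) > 0


def no_null_ids(rows: Rows) -> bool:
    """Data test: every row carries a non-null 'id'."""
    return all(row.get("id") is not None for row in rows)


def drop_duplicates(rows: Rows) -> Rows:
    """Example step: keep the first row seen for each 'id'."""
    seen, unique = set(), []
    for row in rows:
        if row.get("id") not in seen:
            seen.add(row.get("id"))
            unique.append(row)
    return unique


def orchestrate(steps: List[Step], rows: Rows,
                alert: Callable[[str], None]) -> Optional[Rows]:
    """Run steps in order; on the first failed check, alert and stop."""
    for step in steps:
        rows = step.run(rows)
        for check in step.checks:
            if not check(rows):
                alert(f"{step.name}: check '{check.__name__}' failed")
                return None
    return rows


# Hypothetical one-step pipeline used for illustration.
PIPELINE = [Step("dedupe", drop_duplicates, [not_empty, no_null_ids])]

if __name__ == "__main__":
    print(orchestrate(PIPELINE, [{"id": 1}, {"id": 1}, {"id": 2}], print))
    print(orchestrate(PIPELINE, [{"id": None}], print))
```

Stopping at the first failed check keeps bad data from flowing downstream, which is the point of pairing orchestration with automated testing.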

How is DataOps Empowering the Future of Data Management?

When DataOps is implemented, it streamlines processes so that data moves along the pipeline more efficiently and much more quickly than before. This speeds up and elevates the quality of insights for the business, which is why eliminating inefficient and disconnected teams, data sources, and processes is crucial. This can be done through next-generation tools and dedicated data engineers and scientists. It needs a fresh approach and the mindset of an Autonomous Digital Enterprise.

The proliferation of data has ensured that old approaches to mastering big data on a single platform will fail. The one-vendor, one-platform landscape cannot work anymore, least of all without a practical DataOps approach in place.

A good platform enables the interoperability of many data components. It helps to align architecture and streamline processes to manage data and delivery.

An excellent tool can be handy when you need to support your DevOps team in planning, designing, and executing a comprehensive DataOps strategy. You need to invest in a solution that truly helps you align your efforts across your DevOps pipeline. Bonus points if such a solution is scalable and orchestrates the software release process from development to production.

Look for these things before you embrace the gigantic wave of DataOps.